Taking the SAT with the Breakout Expert from Operation Varsity Blues

Akil Bello schools me on a test I first took 34 years ago

Helping students prepare for the SAT/ACT is one of the many things Educational Endeavors does as part of its mission to help students navigate the education system while preserving their energy and enthusiasm for learning.

It’s been a long time since I took a college admissions test (1987), and I’m sure some things have changed. I figure, if I’m going to write about the challenges today’s students face, I should be more informed about the specifics of those challenges. In high school, I was one of those kids who benefited greatly from test scores that outstripped my grades. My combined SAT score was over 1400, with my verbal a little higher than my math.

As a teacher of writing, and because I’m terrified to see how low I would score on the math, I figured I’d start with the verbal section.

So that the integrity of the test would be unquestionable, and because I knew I’d have a lot of questions about the history and development of the test, I asked my friend Akil Bello to administer and score it for me.

When it comes to high-stakes college admissions tests, Akil Bello has seen and done it all: teaching for the Princeton Review in the 1990s, later working as Founder and CEO of the test prep company Bell Curves, and now serving as Senior Director of Advocacy and Advancement at FairTest, where he “works to build resources and tools to ensure that large scale assessment tests are used responsibly and transparently to benefit students.”

Akil Bello is also the breakout star of the recent Netflix documentary, Operation Varsity Blues: The College Admissions Scandal, in which you can find him dispensing wisdom about the dysfunctional system of college admissions.

Drum roll please…

I scored a 660. Not bad, but a drop of 60 to 80 points from my peak performance 34 years ago. I answered 47 of 52 questions correctly on the Reading section and 38 of 44 on the Writing & Language section. I think I must’ve made every rookie (or out-of-practice veteran) mistake possible.

After getting the results, I asked Akil to answer some questions about the test and debrief me on some of the things I did wrong.

John Warner: My memory of the SAT I took before college (in 1987) is of a lot of vocabulary and analogies in the verbal section. Am I misremembering, or has the test changed that much over time?

Akil Bello: You took SAT v15.1. The test is iterative and has changed its content and style many times. In its original form it tested Latin and Greek. Vocab was the primary driver of the verbal portions from probably the ’50s to the ’90s. Now the Reading section is just that, reading, with very little out-of-context vocab, and there is a writing section that has evolved from the TSWE (Test of Standard Written English) in the ’80s, to the SAT Subject Test style of the 2000s, to the current blatant plagiarism of the ACT-style writing section.

JW: I read a lot of books because my mom owned a bookstore, so I had a good vocabulary, which led to my good score, I think. As you see it, what specific skills are being assessed in the Reading and Writing & Language test sections of the current test? What do students who score really well know?

AB: The Reading Test tests your ability to read with pedantic specificity and paraphrase with flexible precision. The Writing Test assesses the ability to apply about 10 particular grammar rules (use of semicolons, modifiers, subject-verb agreement, colons, etc.) in a wide array of ways and constructions. Beyond any nuanced description, the Reading and Writing Tests test the ability to prioritize rules over convention in specific, pedantic ways.

JW: That’s interesting, because I’ve noticed that during the initial peer reviews in my first-year writing class, when students exchange their work with a classmate, the “best” students (meaning the ones who report very high grades and test scores) tend to focus their critiques on those conventions rather than looking at the text holistically for argument, evidence, style, etc. They’ve received signals that the conventions come first, and that seems to be what they default to when responding to a text. I have to guide them toward a different way of thinking.

AB: I’m not surprised. The SAT creates that focus, and if their schools’ curriculums shifted in 11th and 12th grade to align with the SAT/ACT, then they’ve likely spent much of the past two years thinking about technical minutiae rather than holistic meaning.

JW: While taking the test, I struggled with a number of things simply in terms of interpreting or interacting with the test. For example, on the questions that ask you to interpret a particular subset of a reading passage (e.g., lines 42-48), I had a hard time focusing on and comparing the different choices. Is there a hack or technique for that?

AB: Yup. I think a significant part of taking the test is preparing for it: learning its nuances and foibles. For the example you cite, my bet is that you took the lines given to mean the answer was in those lines, rather than that they were pointing to what the question was asking about. The answer is more than likely (like 95% likely) going to be just before or just after the lines cited.

JW: I also realized I biffed a couple of questions because I didn’t understand exactly what the question was asking. One question in the Reading section (42) asked which lines provide the best “evidence” for the answer to the previous question. I made the mistake of thinking it was asking about evidence for the argument of the entire piece, rather than just for the previous test question. I should have known better, because none of the passages I had to choose from would qualify as evidence for an argument. They’re all claims.

AB: That’s really interesting. I’ve never thought about it that way. I don’t think any of the high school students I work with would make that mistake, in part because, if you’re used to these tests, you accept that they have a language and style of their own with a strange relationship to the real world. These tests have an internal logic that is often at odds with our expectations if we’re looking for consistency or alignment with external logic.

JW: On another question (33 in Writing & Language), I thought they were asking which of the choices would work best as a conclusion after the existing last paragraph, but instead they were asking whether any of the choices would be better than the existing conclusion. Would I get fewer of these wrong with practice, as I learn to recognize how the questions work? Are there types of questions that tend to trip up test takers?

AB: I find that the entire format of the Writing Test takes getting used to, from questions that have no actual question (just answer choices) to questions that ask test takers to do a really specific, random thing. This is one of the reasons preparation for these tests is crucial if you want to maximize your performance.

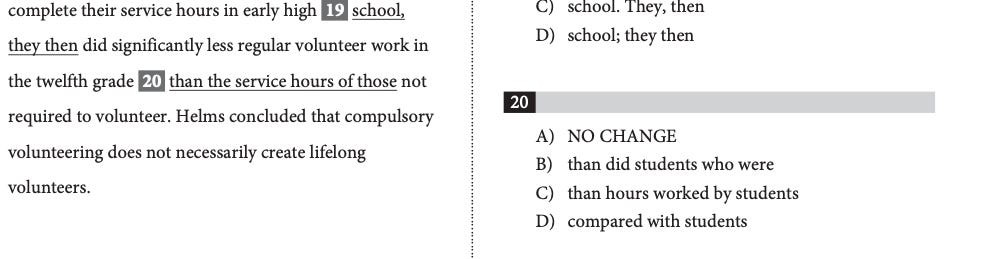

JW: For question 20 on Writing & Language, I prefer my answer (D), stylistically, to the “correct” one (B). (I wouldn’t flag either of them in a piece of student writing.) It just sounds better to me. I’m of the Joan Didion “grammar is a piano I play by ear” school of thought. What distinction is the test making? Why am I wrong?

AB: I probably should google Joan Didion, since this is the second time in the last couple of years that line has come up and I have no clue what it means (the first was in the song “I Saw a White Lady Standing on the Street Just Sobbing” from Netflix’s John Mulaney & the Sack Lunch Bunch). Anyway, this question is about the idiomatic construction “less...than” as opposed to “less...compared with.” Keep in mind that the SAT focuses on specificity and technical correctness rather than simply conveying information clearly, and the answer makes a lot of sense.

JW: What strikes me about the underlying values the test conveys about writing is that its insistence on “correctness” saps writing of the stuff audiences actually respond to: voice, style, energy. Whatever virtues I have as a writer, and whatever success I’ve had, come from my willingness to be “me” on the page. This is perhaps incompatible with school, but in the wider world it’s a benefit to be able to play with language in a way that’s appropriate to the audience.

AB: I think the issue is that standardized tests are inherently limited to things that are technically correct. They can’t test anything built on nuance or a writer’s preference, because then you can’t claim one answer is correct above the others. That’s likely why the Oxford comma isn’t tested, even in cases where you could argue the meaning requires it. A good multiple-choice test avoids anything ambiguous or debatable, which, to some, may also make that same test a bad multiple-choice test, since it becomes very pedantic.

JW: I also made two transcription errors between the test booklet and the bubble sheet. If the test mattered and I cared about my score, I’d like to think I would’ve used the extra time to double-check, but how common is it for a score to be skewed by this kind of mistake?

AB: Almost no student ever has time to double-check. I help students make sure they don’t make mistakes the first time, because otherwise there’s no way to catch them. Most students never catch any transcription mistakes; only a few even see the questions after they take the test, so it would be exceedingly hard to catch your errors. Back in 2011, I knew I’d made a transcription mistake on the official exam, so I paid $55 to have my test hand-scored and was “awarded” 10 more points (with no explanation of what happened).

JW: I finished with about one-third of the test time remaining. What should I have done with that time?

AB: I’d have two approaches for a test taker like you: (1) slow down and spend more time from the start to avoid making mistakes, or (2) put a star next to any questions you’re uncertain of so that you can easily find them at the end and go back to review them. I prefer the former strategy and generally find it more effective.

JW: Just from being familiar with the test now, I feel like I could boost my score a couple of notches, but I also feel like I could take a dozen practice tests and never score perfectly. It’s almost as though there are questions specifically designed to trip up the test taker. Am I wrong?

AB: Sort of. It wouldn’t be much of a test if every question had only one choosable answer. To make a good (read: tough) test, every question includes reasonable, logical alternative answers that are incorrect. This is why test publishers beta test questions: to figure out whether each question functions as they want it to. The distribution of the percentage of people selecting each answer choice (the p-value) is one of the metrics that is a big deal to test publishers and psychometricians. Consider the following answer distributions:

A. 1% of test takers selected

B. 6%

C. 4%

D. 89%

Now consider this one:

A. 11% of test takers selected

B. 16%

C. 24%

D. 49%

The second indicates a “better” question because it has more reasonable “distractor” answers that entice test takers who, in the logic of psychometricians, are of lower ability. And all of that is before I even get into “speededness,” another metric that factors into test design.
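To make the arithmetic behind these two distributions concrete, here is a minimal Python sketch. It assumes D is the correct answer in both examples (the interview doesn’t say) and uses the conventional classical-test-theory meaning of “p-value”: the proportion of test takers who choose the correct answer. The 5% cutoff for counting a wrong answer as a working distractor is a hypothetical rule of thumb chosen for illustration, not anything the College Board has published.

```python
# Answer-choice distributions quoted in the interview (percent of test takers).
# Assumption: D is the keyed (correct) answer in both examples.
question_1 = {"A": 1, "B": 6, "C": 4, "D": 89}
question_2 = {"A": 11, "B": 16, "C": 24, "D": 49}

# Hypothetical cutoff: a wrong answer chosen by fewer than 5% of test takers
# is treated as a distractor that isn't really "working."
DISTRACTOR_CUTOFF = 5


def item_summary(distribution, key="D", cutoff=DISTRACTOR_CUTOFF):
    """Return the item's p-value and the wrong answers that attract test takers."""
    total = sum(distribution.values())
    p_value = distribution[key] / total  # proportion who chose the correct answer
    functioning = [
        choice
        for choice, pct in distribution.items()
        if choice != key and pct >= cutoff
    ]
    return p_value, functioning


for name, dist in [("Question 1", question_1), ("Question 2", question_2)]:
    p, distractors = item_summary(dist)
    print(f"{name}: p-value {p:.2f}, functioning distractors {distractors}")
```

Run as written, the first question comes out with a p-value of 0.89 and only one wrong answer (B) pulling meaningful traffic, while the second has a p-value of 0.49 and all three wrong answers doing their job, which is the sense in which Akil calls it the “better” question.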

JW: Okay, now you have to talk about “speededness.”

AB: In testing (psychometrics), speededness refers to the impact that timing has on performance and on the ability to complete the questions on the test. Generally, timed tests are described as power tests, moderately speeded tests, or highly speeded tests. I believe the metric is something like this: if fewer than 90% of test takers complete the test in the time allowed, it’s considered highly speeded. If I’m not mistaken, SAT version 14.0 was calibrated so that 85% of test takers would reach 75% of the questions in a section. Some research on speededness is here. Think of it as regular chess versus speed chess and the impact that difference would have on performance. Many people would perform wildly differently under those two sets of timing rules.
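The completion thresholds Akil describes boil down to simple counting, sketched below in Python. Everything here is an assumption for illustration: the list of how far each test taker got is made-up data, the 52-question section length is borrowed from the Reading section count above, and the 90% completion rule is Akil’s approximate recollection, not an official definition.

```python
# Hypothetical data: the last question each of ten test takers reached in a
# 52-question section (made-up numbers, not real SAT results).
QUESTIONS_IN_SECTION = 52
items_reached = [52, 52, 52, 50, 52, 52, 40, 52, 52, 52]


def share_reaching(reached, fraction_of_test, n_items=QUESTIONS_IN_SECTION):
    """Fraction of test takers who reached at least `fraction_of_test` of the items."""
    threshold = fraction_of_test * n_items
    return sum(1 for r in reached if r >= threshold) / len(reached)


completed = share_reaching(items_reached, 1.0)
reached_three_quarters = share_reaching(items_reached, 0.75)

# Rule of thumb as Akil recalls it (approximate, not an official definition):
# fewer than 90% finishing in the allotted time suggests a highly speeded test.
label = "highly speeded" if completed < 0.90 else "not highly speeded"
print(f"completed: {completed:.0%}, reached 75% of items: {reached_three_quarters:.0%} -> {label}")
```

In this toy sample everyone reaches three-quarters of the section, but only 80% finish it, so under the approximate 90% rule the section would count as highly speeded. It’s the chess analogy in numbers: the same test takers, given more time, might all have finished.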

--

Thanks to Akil for an interesting and enlightening experience, and if you’re looking for test prep that’s humane and holistic, check out what Educational Endeavors is up to.

I have a lot of thoughts about the disconnect between what this kind of test values and what is typically valued in the kind of college writing courses I spent two decades teaching, but that will have to wait for a future post.

I guess I should also suck it up and take a swing at the math section. I’m terrified to see how dumb I’ve gotten.